The process of developing and optimising a regression model requires, almost invariably, a sequence of steps. These steps can be combined in a single predictor using the Pipeline function of scikit-learn.

In this short post, we are going to discuss two simple examples of applying a Pipeline for the optimisation of common regression models used in spectroscopy:

- Principal Component Regression (PCR)

- Partial Least Square Regression (PLSR)

If you’d like a refresher on these topics, take a look at our introductory posts on PCR and PLSR, or browse through all the posts using regression.

Pipeline PCR

Principal Components Regression is the combination of two steps:

- Principal Component Analysis (PCA), which is the decomposition of the spectra into a new set of variables chosen as to maximise the variance of the data. An introduction to PCA is available here, and many resources are available on the web (I have a full lecture on PCA in our Patreon page, for subscribers).

- Linear Regression on the selected number of principal components.

This structure can be easily implemented into a scikit-learn Pipeline, and here’s how. Let’s start by importing the required libraries.

|

1 2 3 4 5 6 7 8 9 |

import pandas as pd import numpy as np from scipy.signal import savgol_filter from sklearn.preprocessing import StandardScaler from sklearn.decomposition import PCA from sklearn.linear_model import LinearRegression from sklearn.pipeline import Pipeline from sklearn.model_selection import GridSearchCV |

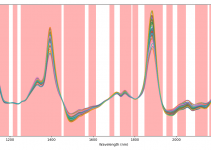

Secondly, let’s import the data and preprocess it. The data is taken directly from our GitHub repo

|

1 2 3 4 5 6 7 8 9 |

# Read data url = 'https://raw.githubusercontent.com/nevernervous78/nirpyresearch/master/data/peach_spectra_brix.csv' data = pd.read_csv(url) # Define arrays X = data.values[:,1:].astype("float32") y = data["Brix"].values.astype("float32") # Calculate first derivative X1 = savgol_filter(X, 21, polyorder = 2, deriv=1) |

Note that, in the last line, we calculate the first derivative and smooth the data with the Savitzky-Golay method. In principle, this step could also be included in the pipeline. That would require writing a custom class, which is outside the aim of this introductory tutorial.

Now we can define the pipeline as follows:

|

1 |

pcr_pipe = Pipeline([('scaler', StandardScaler()), ('pca', PCA() ), ('linear_regression', LinearRegression())]) |

The pipeline is composed of three functions: 1) Standard Scaler, 2) PCA decomposition and 3) Linear regression. The pipeline so designed will act like any other predictor, whereby the functions are applied in the order they are defined in the list.

In order to achieve the best performance in PCR however, we would like to evaluate what is the optimal number of principal components to select. The PCA procedure decomposes the data into a reduced array whose size is given by the number of samples, or the number of wavelength bands (whichever is smaller). Usually however, only a fairly limited number of principal components contains useful information for the model we want to develop. Therefore one can ignore principal components above a certain optimal value.

To evaluate this optimal value we can run a cross-validation experiment by changing the relevant parameter in this way

|

1 2 3 4 5 6 7 8 9 |

# Define the parameter to vary. Here's the number of PC parameters = {'pca__n_components':np.arange(1,31,1)} # Use GridSearchCV to find the optimal parameter(s) in cross-validation pcr = GridSearchCV(pcr_pipe, parameters, scoring = 'neg_mean_squared_error') # Fit the instance pcr.fit(X1, y) # Print the result print(pcr.best_estimator_) |

The ‘scoring’ parameter in GridSearchCV specifies what type of metric we want to optimise. In this case, we chose the usual Mean-Squared Error (MSE). Fitting the estimator means calculating the MSE in cross-validation for each parameter, as defined in line 2 (i.e. for a number of principal components ranging from 1 to 31 in steps of 1). Once the estimator is fitted we can print the result. The output is our case reads

|

1 2 |

Pipeline(steps=[('scaler', StandardScaler()), ('pca', PCA(n_components=6)), ('linear_regression', LinearRegression())]) |

The cross-validation procedure has established that 6 is the number of principal components that minimises the MSE. Now, to fit the estimator to the training set (note: here I’m using the same set of data for the sake of illustration, but you’ve got the drift), you simply have to type

|

1 |

pcr.best_estimator_.fit(X1,y) |

That’s it. The pcr.best_estimator_ instance (i.e. the optimised pipeline) works just as any other estimator. In particular it has the “fit” and “predict” functions we know and love.

Pipeline PLSR

The previous example can be replicated to define a Partial Least Square Regression model and optimise the number of latent variables used by the regressor. The code is

|

1 2 3 4 5 6 7 8 9 |

from sklearn.cross_decomposition import PLSRegression from sklearn.preprocessing import MinMaxScaler # Define the parameter to vary. Here's the number of PC parameters_pls = {'pls__n_components':np.arange(1,16,1)} plsr_pipe = Pipeline([('scaler', MinMaxScaler()), ('pls', PLSRegression())]) plsr = GridSearchCV(plsr_pipe, parameters_pls, scoring = 'neg_mean_squared_error') plsr.fit(X1, y) print(plsr.best_estimator_) |

In this case we are chaining a MinMax Scaler and the PLS Regression instance (Note that fitting a PLSR instance with scikit-learn does apply a Standard Scaler by default, hence adding a Standard Scaler to the pipeline would have been redundant.

All the other commands are the same as the previous case. Here, the GridSearchCV fit will return the optimal number of latent variables for the PLS Regression.

That’s it for today. As always, thanks for reading and don’t forget to share this content with friends and colleagues!

Until next time.

Daniel