Welcome to the second instalment of a series of posts introducing deep neural networks (DNN) for spectral data regression. In the first post we gave a bare-bone code to get you started with neural network training using Tensorflow and Keras on sample NIR data. From this post and in the next few, the topic will be understanding the meaning of the main parameters required to design and train a neural network, and a few practical tips to do that. A subsequent series of posts will deal with introduction of Convolutional Neural Networks and their training.

The topic of today is the activation function. We’ll discuss what an activation function is, a few examples, and provide sample code to train a neural network for spectral data analysis with different activation functions.

As for most of the posts in this blog, the aim of this tutorial post is to provide the basic information you may need started in your journey. Useful background reading is

- An earlier post on Binary classification of spectra with a single perceptron.

- The first post of this short series: Deep neural networks for spectral data regression with TensorFlow.

The main parameters of a neural network and their meaning

If you are familiar with traditional regression techniques, one of the thing you soon realise when learning deep learning is the large amount of parameters that need to be specified in a neural network. How do we go about finding the optimal settings? Truth is that optimising the parameters is more a craft than a science. The optimal configuration (assuming one can find such a thing) is very dependent on the type of problem at hand. Having said that however, some good practices have been developed by the community over the years, that are a very good starting point for our parameter space exploration.

Our aim in the next few posts will be to list the main parameters and explain their meaning and significance. I’m very firm in the opinion that I can’t optimise what I don’t (yet) understand, and therefore it’s always a good practice to invest some time in understanding the meaning of the various settings or functions, before starting tinkering with them. Here’s the list of the parameters we’ll discuss:

- The activation function

- The optimiser

- The learning rate (and the learning schedule)

- The network architecture

Today we’ll be focusing on the activation function.

The activation function

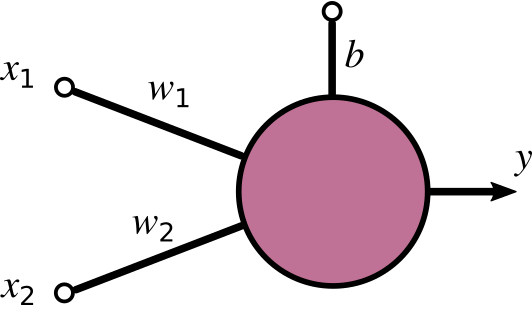

The activation function is the basic feature of each neuron in a neural network. As we’ve explained in our perceptron post, a neuron (or perceptron) is a mathematical function y = f(x) that takes an input x and produces the output is y. The neuron is characterised by a series of inputs, a set of weights and a bias. In the example below the neuron has two inputs (x_{1} and x_{2}), two weights (w_{1} and w_{2}), and the bias b.

This mathematical function is a specific combination of two operations.

The first operation is the dot product of input and weight plus the bias: a = \mathbf{x} \cdot \mathbf{w} + b= x_{1}w_{1} + x_{2}w_{2} +b. This operation yields what is called the activation of the perceptron (we called it a), which is a single numerical value.

The second operation is a non-linear step applied to the output a, This second operation is called activation function and is the parameter amenable to tuning. If you take a look at the basic code defining a fully-connected neural network (again, if you haven’t yet, please consider reading the first post of this series, where these steps are first laid out), the activation function is the string ‘relu’.

|

1 2 3 4 5 6 7 8 |

model = tf.keras.Sequential([ tf.keras.layers.Dense(256, activation='relu'), tf.keras.layers.Dense(128, activation='relu'), tf.keras.layers.Dense(64, activation='relu'), tf.keras.layers.Dense(32, activation='relu'), tf.keras.layers.Dense(16, activation='relu'), tf.keras.layers.Dense(4, activation='relu'), tf.keras.layers.Dense(1, activation="linear")]) |

ReLU, aka Rectifier Linear Unit, is arguably the most popular in modern neural networks, but it’s not the only choice. In our post on binary classification with a perceptron we used a logistic (sigmoid) function. The full list of activation functions you can use with Tensorflow is available here and it includes functions such as the sigmoid and the hyperbolic tangent. All these functions have in common (with the exception of the “linear” activation function which we’ll discuss in a minute) is a non-linear transfer between input and output. Logistic or hyperbolic tangent have the typical “S-curve” shape which you can plot for yourself using the code below

|

1 2 3 4 5 6 7 8 9 10 11 12 13 |

import numpy as np import matplotlib.pyplot as plt def sigmoid ( x ): f = 1.0 / ( 1.0 + np.exp ( - x ) ) ; return f x = np.arange(-10,10,0.1) plt.plot(x, sigmoid(x), label = "Sigmoid") plt.plot(x, np.tanh(x), label="Hyperbolic Tangent") plt.legend() plt.xlabel("$x$") plt.show() |

The code generates this image

showing a sigmoid (curve limited between 0 and 1, and an hyperbolic tangent (limited between -1 and 1). In this examples the variable x goes from -10 to 10. Both functions acts essentially as thresholds: once the input is above a certain value the activation function “switches on”, i.e. there is an output from the perceptron. Functions such as S-curves used to be popular in the past, but suffer from a common problem. See how the functions are essentially flat for low and high values of x? If x changes from 5 to 6, for instance, both activation functions barely change at all. In technical terms these functions “saturate”, that is the have large regions in which they have no sensitivity to changing inputs.

There is another problem with this sort of activation functions that manifest itself when applied to neurons in inner layers of a deep network. It’s called the “vanishing gradient problem” and it’s connected to the way a deep network is trained. In a very simple way, training involves changing the weights of each perceptron by a quantity that is proportional to the gradient (i.e. the derivative, if we were in one dimension) of the cost function. This is the basic of the gradient descent method, which we introduced in our post on classification with a single perceptron.

The gradient at each layer is effectively obtained by the chain rule which implies multiplying partial derivatives of the layers that precedes (or follow) the layer in question. Since S-curves like the logistic of the hyperbolic tangent have gradients in the range [0,1], multiplication of these small numbers may lead to vanishingly small gradients in deep layers. In practice this has the effect to slow down (or inhibit altogether) the ability of a network to train effectively. A through discussion of these problems can be found in [] and [] (see links at the end of the post).

That brings us back to the Rectified Linear Unit (ReLU). The ReLU activation functions is linear for positive inputs and is zero for negative inputs. It can be generated and visualised with the simple code below

|

1 2 3 4 5 6 7 8 9 10 11 |

import numpy as np import matplotlib.pyplot as plt def relu(x): return np.maximum(0,x) x = np.arange(-10,10,0.1) plt.plot(x, relu(x), label = "ReLU") plt.legend() plt.xlabel("$x$") plt.show() |

which produces the following plot

The ReLU activation function is obviously non-linear: it has a discontinuity at zero, which means that the perceptron “switches on” only for positive values. Since the ReLU is a linear function for positive values of the input, its gradient will be constant regardless of the input value (if positive). Hence, the problem of vanishing gradient is circumvented. This is the main reason that has brought the ReLU function to the fore in recent years, and it is today considered a de facto standard in most instances.

The ReLU activation function is obviously non-linear: it has a discontinuity at zero, which means that the perceptron “switches on” only for positive values. Since the ReLU is a linear function for positive values of the input, its gradient will be constant regardless of the input value (if positive). Hence, the problem of vanishing gradient is circumvented. This is the main reason that has brought the ReLU function to the fore in recent years, and it is today considered a de facto standard in most instances.

Before closing this section, let’s take a look back at the basic neural network architecture written above. You might have noticed that the activation function of the last layer is set to the string ‘linear’. A linear activation function is effectively a pass-through function. It just reproduces the input. This is the function required in the last layer of the network when we are dealing with a regression problem (as opposed to a classification problem). The output of a regression (just like a prediction of a linear fit) is a single number. Therefore the last layer of a deep network for regression must have a single neuron and its output must be reproduced at the exit.

As an aside the “linear” activation function is the default for a dense layer. Strictly speaking then, we would not need to specify it, but I have done it in the example above for the sake of clarity.

Training comparison: ReLU versus sigmoid

In order to get a practical feel for the topic discussed here, let’s compare the same neural network, trained on the same dataset, by only changing the type of activation function. Here’s the entire code.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 |

import numpy as np import matplotlib.pyplot as plt import pandas as pd from sklearn.preprocessing import MinMaxScaler import tensorflow as tf def basic_model(activation_function, lr = 0.0001): model = tf.keras.Sequential([ tf.keras.layers.Dense(256, activation=activation_function), tf.keras.layers.Dense(128, activation=activation_function), tf.keras.layers.Dense(64, activation=activation_function), tf.keras.layers.Dense(32, activation=activation_function), tf.keras.layers.Dense(16, activation=activation_function), tf.keras.layers.Dense(4, activation=activation_function), tf.keras.layers.Dense(1, activation="linear")]) model.compile(loss='mean_absolute_error', optimizer=tf.keras.optimizers.Adam(learning_rate=lr)) return model data = pd.read_excel("File_S1.xlsx") X = data.values[1:,8:].astype('float32') y = data["TOC"].values[1:].astype("float32") X_train, X_test, y_train, y_test = train_test_split(X, y,test_size=0.4,random_state=79) scaler = MinMaxScaler() scaler.fit(X_train) Xs_train = scaler.transform(X_train) Xs_test = scaler.transform(X_test) model_relu = basic_model(activation_function = 'relu') history_relu = model_relu.fit(Xs_train, y_train, epochs=1000, \ steps_per_epoch = 10, \ validation_data=(Xs_test, y_test), \ verbose=1) model_sigm = basic_model(activation_function = 'sigmoid') history_sigm = model_sigm.fit(Xs_train, y_train, epochs=1000, \ steps_per_epoch = 10, \ validation_data=(Xs_test, y_test), \ verbose=1) with plt.style.context(('seaborn-whitegrid')): fig, ax = plt.subplots(figsize=(8, 6)) ax.plot(history_relu.history['val_loss'], linewidth=2, label='Validation Loss, ReLU') ax.plot(history_sigm.history['val_loss'], linewidth=2, label='Validation Loss, Sigmoid') plt.xticks(fontsize=18) plt.yticks(fontsize=18) ax.set_xlabel('Number of epochs',fontsize=18) ax.set_ylabel('MAE',fontsize=18) plt.legend(fontsize=18) plt.tight_layout() plt.show() |

Most of this code is identical to what we discussed in the previous post, so I won’t go into it again. The main difference is that we brought the model definition into an independent function, which allows us to pass the activation function as a parameter. The rest is fairly straightforward: we define two networks which are identical except for the activation function and we train them for 1000 epochs on the same data.

This is the plot generated in my case.

Your output may be slightly different owing to a different initialisation of the neural network weights.

Note how the ReLU activation function leads to a much quicker training of the network. After 1000 epochs, the training with ReLU has substantially convergence, while the same process with the sigmoid is still well on the way.

Conclusions and References

The aim of this post was to introduce the activation function in a perceptron and, by extension, in a neural network. We discussed a few examples of activation functions and shown a comparison between training a neural network with a rectified linear unit as opposed to a sigmoid activation function.

For reference, here’s the full list of posts (published so far) dedicated to neural network for spectral data processing with Tensorflow.

- Deep neural networks for spectral data regression with TensorFlow.

- Understanding neural network parameters with TensorFlow in Python: the activation function (this one).

There are a large number of excellent resources out there to learn more about neural networks. Relevant for this post are

- J. Brownlee, How to Choose an Activation Function for Deep Learning.

- J. Brownlee, A Gentle Introduction to the Rectified Linear Unit (ReLU).

- The TensorFlow Python library.

- The Tensorflow regression tutorial.

- F. Chollet, Deep learning with Python, second edition. Simon and Schuster, 2021.

- The dataset is taken from the publicly available data associated with the paper by J. Wenjun, S. Zhou, H. Jingyi, and L Shuo, In Situ Measurement of Some Soil Properties in Paddy Soil Using Visible and Near-Infrared Spectroscopy, PLOS ONE 11, e0159785.

- The featured image photo by Lukas from Pexels.

Thanks for reading and until next time,

Daniel